AI Agents

Build intelligent, autonomous AI agents that can interact with external systems, make decisions, and orchestrate complex workflows using Catalyst's messaging and state management capabilities.

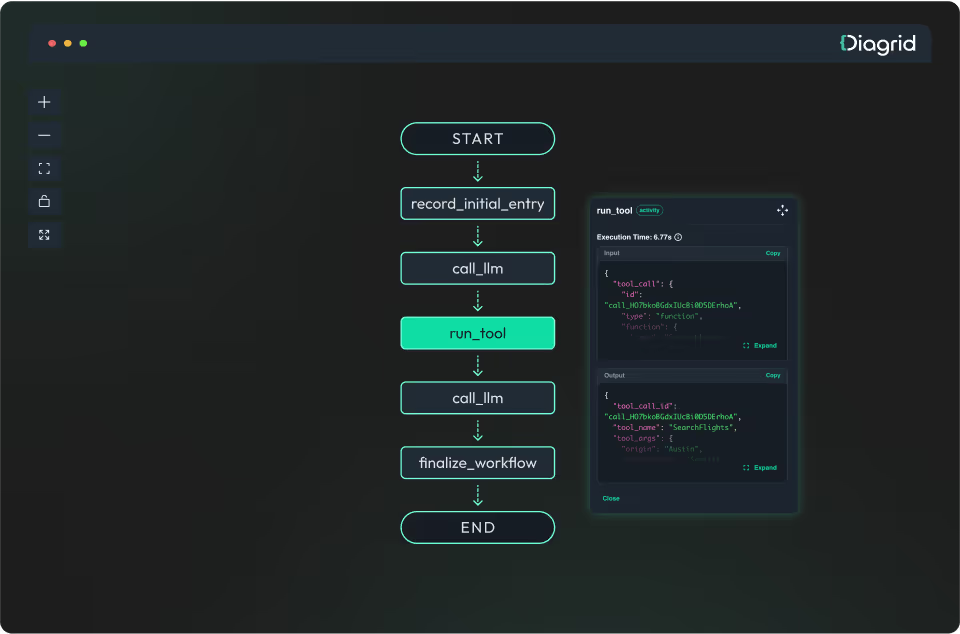

Durable Agents

Catalyst has native support for the Dapr Agents framework, enabling durable agents that persist their execution state across restarts and failures.

Below is an example of a durable order-processing agent that uses a process_order tool and decides whether to approve orders. It keeps its workflow state in a configured state store and calls an LLM configured through DaprChatClient:

from dapr_agents import DaprChatClient, DurableAgent
from dapr_agents.memory import ConversationDaprStateMemory


async def main():
    # process_order is a tool function defined elsewhere in the application.
    order_processor = DurableAgent(
        name="OrderProcessingAgent",
        role="Order Processing Specialist",
        goal="Process customer orders with appropriate approval workflows",
        instructions=[
            "You are an order processing specialist that handles customer orders.",
            "For orders under $1000, automatically approve them.",
            "For orders $1000 or more, escalate them for manual approval.",
            "Use the process_order tool to handle order processing.",
            "Provide clear status updates to customers.",
        ],
        tools=[process_order],
        # Conversation history store
        memory=ConversationDaprStateMemory(
            store_name="statestore",
            session_id="customer-session-123",
        ),
        # Dapr Conversation API for LLM interactions
        llm=DaprChatClient(
            component_name="openai-gpt-4o",
        ),
        # Execution state (workflow progress, retries, failure recovery)
        state_store_name="statestore",
        state_key="execution-orders",
    )
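The agent above references a process_order tool that is not shown. As a minimal sketch of what its core logic might look like, the function below applies the $1000 threshold from the agent instructions. The signature, the `OrderDecision` type, and the status strings are hypothetical, and registering the function as a Dapr Agents tool is left out so the sketch stays self-contained.

```python
from dataclasses import dataclass

# Threshold taken from the agent instructions; the constant name is hypothetical.
APPROVAL_THRESHOLD_USD = 1000.0


@dataclass
class OrderDecision:
    """Hypothetical result shape returned to the agent."""
    order_id: str
    amount: float
    status: str  # "approved" or "pending_manual_approval"


def process_order(order_id: str, amount: float) -> OrderDecision:
    """Approve orders under the threshold; escalate everything else."""
    if amount < 0:
        raise ValueError("amount must be non-negative")
    if amount < APPROVAL_THRESHOLD_USD:
        return OrderDecision(order_id, amount, "approved")
    return OrderDecision(order_id, amount, "pending_manual_approval")
```

Keeping the threshold in the tool rather than only in the prompt means the approval rule is enforced by code even if the model misreads an amount.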

Key capabilities:

- Durable executions - Every step in the agent's reasoning and execution is automatically saved, allowing the agent to recover from failures without losing progress or repeating expensive LLM calls

- Built-in resiliency policies - Leverage Dapr's resiliency policies to automatically retry failed operations and recover from transient failures in external systems or LLM APIs

- Complete observability - Gain full visibility into each step of the agent's execution, including timing information, inputs, outputs, and the decision-making process at every stage

- Agent identity - Catalyst assigns each agent a unique identity backed by an X.509 certificate, which controls its access rights to resources and sets a security boundary on what it can accomplish
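To make the durable execution idea concrete, here is a conceptual sketch, not Dapr's actual implementation. Each step's result is checkpointed under the step name, so a rerun after a failure reuses saved results instead of repeating completed work. The `run_durably` helper, the dict standing in for a state store, and the step functions are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[], str]]


def run_durably(steps: List[Step], checkpoint: Dict[str, str]) -> List[str]:
    """Run steps in order, reusing any result already saved in `checkpoint`."""
    results = []
    for name, fn in steps:
        if name not in checkpoint:
            # Executed only when no saved result exists for this step.
            checkpoint[name] = fn()
        results.append(checkpoint[name])
    return results


calls = {"llm": 0, "charge": 0}
fail_once = {"armed": True}


def expensive_llm_call() -> str:
    calls["llm"] += 1
    return "order looks valid"


def flaky_charge() -> str:
    calls["charge"] += 1
    if fail_once["armed"]:
        fail_once["armed"] = False
        raise ConnectionError("simulated transient failure")
    return "charged"


steps = [("analyze", expensive_llm_call), ("charge", flaky_charge)]
store: Dict[str, str] = {}  # stands in for a persistent state store

try:
    run_durably(steps, store)      # first attempt fails at "charge"
except ConnectionError:
    pass
final = run_durably(steps, store)  # resume: "analyze" is not executed again
```

After the second run, the simulated LLM call has executed once and the failing step twice, which is the behavior the bullet describes: progress is kept and the expensive call is not repeated.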

Pluggable Memory and Context

AI agents need to retain context across interactions to provide coherent and adaptive responses. Catalyst provides a pluggable memory architecture that allows agents to store conversation history and context in various state stores. Below is an example of configuring memory for a durable agent. This allows the agent to persist conversation state in a Dapr state store rather than keeping it in memory.

memory = ConversationDaprStateMemory(
    store_name="statestore",  # Maps to your Catalyst state store component
    session_id="customer-session-123",
)

Rather than being limited to volatile in-memory storage, agents can use any of the Dapr state store components as their persistent memory implementation. This gives you access to 28+ different state store providers, from Redis and PostgreSQL to AWS DynamoDB and Azure Cosmos DB, so you can choose the persistence store that fits your production environment.
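The pluggable design can be illustrated with a small standard-library sketch. It is not the Dapr Agents memory API: the `ConversationMemory` protocol, both store classes, and `remember_turn` are hypothetical names. The point is that agent-side code depends only on the interface, so the backend can be swapped without touching it.

```python
import json
import sqlite3
from typing import Dict, List, Protocol

Message = Dict[str, str]


class ConversationMemory(Protocol):
    def add(self, session_id: str, message: Message) -> None: ...
    def history(self, session_id: str) -> List[Message]: ...


class InMemoryStore:
    """Volatile: history is lost when the process exits."""

    def __init__(self) -> None:
        self._data: Dict[str, List[Message]] = {}

    def add(self, session_id: str, message: Message) -> None:
        self._data.setdefault(session_id, []).append(message)

    def history(self, session_id: str) -> List[Message]:
        return list(self._data.get(session_id, []))


class SqliteStore:
    """Persistent when given a file path; ':memory:' keeps the demo self-contained."""

    def __init__(self, path: str = ":memory:") -> None:
        self._db = sqlite3.connect(path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS messages (session_id TEXT, body TEXT)"
        )

    def add(self, session_id: str, message: Message) -> None:
        self._db.execute(
            "INSERT INTO messages VALUES (?, ?)", (session_id, json.dumps(message))
        )
        self._db.commit()

    def history(self, session_id: str) -> List[Message]:
        rows = self._db.execute(
            "SELECT body FROM messages WHERE session_id = ? ORDER BY rowid",
            (session_id,),
        )
        return [json.loads(body) for (body,) in rows]


def remember_turn(memory: ConversationMemory, session_id: str, text: str) -> int:
    """Store one user turn and return the session's message count."""
    memory.add(session_id, {"role": "user", "content": text})
    return len(memory.history(session_id))
```

Calling `remember_turn` with either store gives the same result, which mirrors how changing `store_name` points an agent at a different state store component.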

Swappable LLM Providers

Catalyst supports the Dapr Conversation API as an LLM abstraction layer, enabling agents to switch between model providers while gaining built-in response caching, PII protection, and resilience. Here is how to configure an LLM provider for a durable agent using the Dapr Conversation API abstraction.

from dapr_agents import DaprChatClient

llm = DaprChatClient(
    component_name="openai-gpt-4o",  # Maps to your Catalyst conversation component
)

Key benefits:

- LLM abstraction - Swap providers and models without changing agent code

- Prompt caching - Reduce latency and costs for repeated calls

- Security and PII obfuscation - Protect sensitive data automatically

- Retries, timeouts, and circuit breakers - Built-in resiliency

- Tracing and metrics - OpenTelemetry and Prometheus support
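As a rough illustration of the first two benefits, the sketch below resolves a provider by component name and caches repeated prompts. It does not use the Dapr Conversation API: the `ChatClient` class, the stand-in provider functions, and the "anthropic-claude" component name are hypothetical.

```python
from typing import Callable, Dict


def fake_openai(prompt: str) -> str:
    # Stand-in for a real provider call.
    return f"[openai] {prompt}"


def fake_anthropic(prompt: str) -> str:
    # Stand-in for a real provider call.
    return f"[anthropic] {prompt}"


# Hypothetical registry: component name -> provider function.
PROVIDERS: Dict[str, Callable[[str], str]] = {
    "openai-gpt-4o": fake_openai,
    "anthropic-claude": fake_anthropic,
}


class ChatClient:
    """Looks up the provider by component name and caches identical prompts."""

    def __init__(self, component_name: str) -> None:
        self._complete = PROVIDERS[component_name]
        self._cache: Dict[str, str] = {}
        self.provider_calls = 0

    def generate(self, prompt: str) -> str:
        if prompt not in self._cache:
            self.provider_calls += 1
            self._cache[prompt] = self._complete(prompt)
        return self._cache[prompt]


def summarize(client: ChatClient, text: str) -> str:
    """Agent-side code: unaware of which provider sits behind the client."""
    return client.generate(f"summarize: {text}")
```

Switching providers changes only the string passed to `ChatClient`, and a repeated prompt is served from the cache without a second provider call.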

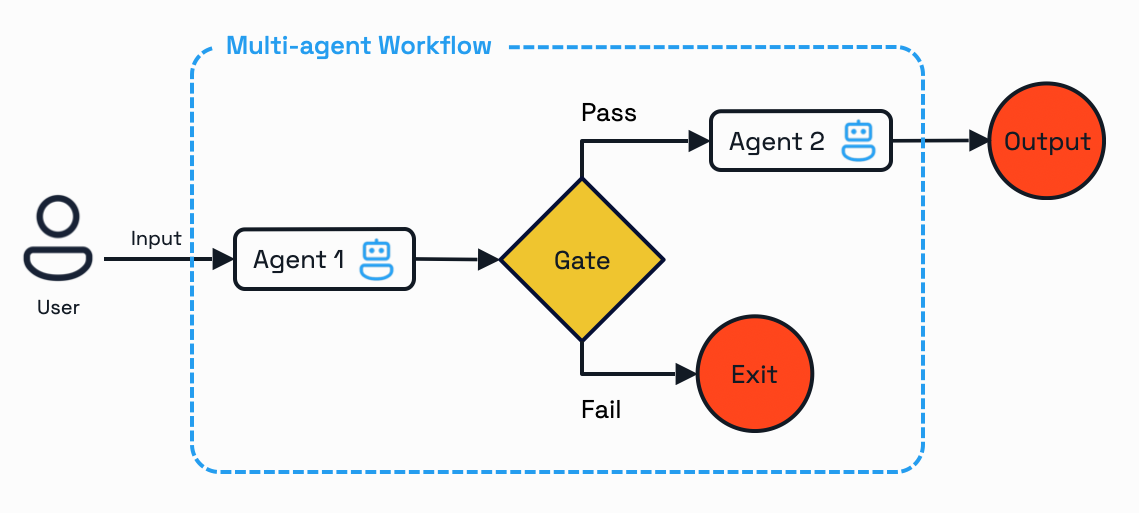

Multi-Agent Workflows

Beyond standalone agents, Catalyst enables you to embed LLM-powered reasoning and agent logic directly within deterministic workflow orchestrations. This approach combines the structure and predictability of traditional workflows with the intelligence and adaptability of LLMs, creating systems that are both reliable and context-aware.

Dapr Agents provides extensions that make it easy to integrate LLM calls or agents as workflow activities. Below is a workflow demonstrating how to orchestrate two agents with a conditional gate between them.

# --------- AGENTS ---------

triage_agent = Agent(
    name="Triage Agent",
    role="Customer Support Triage Assistant",
    goal="Assess entitlement and urgency.",
    instructions=[
        "Determine whether the customer has entitlement.",
        "Classify urgency as URGENT or NORMAL.",
        "Return JSON with: entitlement, urgency.",
    ],
)

expert_agent = Agent(
    name="Expert Agent",
    role="Technical Troubleshooting Specialist",
    goal="Diagnose issue and propose a resolution.",
    instructions=[
        "Use the provided customer context and issue description.",
        "Summarize the resolution in a customer-friendly message.",
        "Return JSON with: resolution, customer_message.",
    ],
)

# --------- WORKFLOW ---------

@workflow(name="customer_support_workflow")
def customer_support_workflow(ctx: DaprWorkflowContext, input_data: dict):
    triage = yield ctx.call_activity(triage_activity, input=input_data)
    if not triage.get("entitlement"):
        return {"status": "rejected", "reason": "No entitlement"}
    expert = yield ctx.call_activity(expert_activity, input=input_data)
    return {"status": "completed", "result": expert}


@activity(name="triage_activity")
@agent_activity(agent=triage_agent)
def triage_activity(ctx) -> dict:
    """Customer: {name}. Issue: {issue}."""
    pass


@activity(name="expert_activity")
@agent_activity(agent=expert_agent)
def expert_activity(ctx) -> dict:
    """Customer: {name}. Issue: {issue}."""
    pass

This architectural pattern is ideal for business-critical applications where you need the intelligence of LLMs combined with the reliability and observability that Catalyst workflows provide.
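Because the conditional gate relies on the triage agent returning well-formed JSON, it can help to validate that output before branching. The sketch below is one possible approach under stated assumptions, not part of Dapr Agents: `parse_triage` and `support_flow` are hypothetical helpers, and the gate fails closed (treats the customer as not entitled) when the reply cannot be parsed.

```python
import json
from typing import Any, Callable, Dict

# Returned whenever the triage reply is unusable: deny by default.
FAIL_CLOSED = {"entitlement": False, "urgency": "NORMAL"}


def parse_triage(raw: str) -> Dict[str, Any]:
    """Parse the triage agent's JSON reply, falling back to FAIL_CLOSED."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return dict(FAIL_CLOSED)
    if not isinstance(data, dict):
        return dict(FAIL_CLOSED)
    urgency = data.get("urgency")
    return {
        # Only a literal JSON `true` counts as entitled.
        "entitlement": data.get("entitlement") is True,
        "urgency": urgency if urgency in ("URGENT", "NORMAL") else "NORMAL",
    }


def support_flow(raw_triage: str, expert: Callable[[], Dict[str, str]]) -> Dict[str, Any]:
    """Plain-Python version of the gate: call the expert only when entitled."""
    triage = parse_triage(raw_triage)
    if not triage["entitlement"]:
        return {"status": "rejected", "reason": "No entitlement"}
    return {"status": "completed", "result": expert()}
```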

The following patterns demonstrate common agentic orchestration scenarios that can benefit from Catalyst's workflow capabilities.

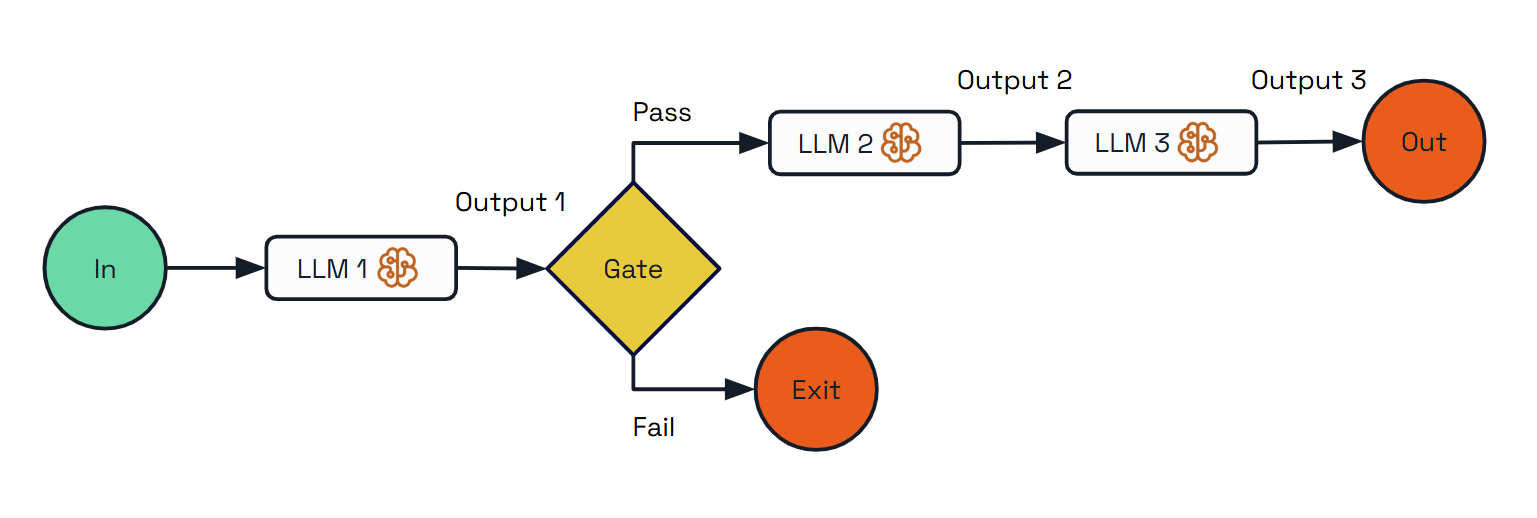

Prompt Chaining

Decompose complex tasks into sequential steps where each LLM call processes the output of the previous one. Enables better control, validation between steps, and multi-stage analysis.
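A minimal sketch of prompt chaining, assuming a stand-in `stub_llm` function in place of a real model call. The `chain` helper and the prompt templates are hypothetical; the sketch shows each step consuming the previous output, with a validation check between steps.

```python
from typing import Callable, List

LLM = Callable[[str], str]


def chain(llm: LLM, templates: List[str], validate: Callable[[str], bool],
          user_input: str) -> str:
    """Feed each step's output into the next prompt, validating in between."""
    output = user_input
    for index, template in enumerate(templates):
        output = llm(template.format(input=output))
        if not validate(output):
            raise ValueError(f"step {index} produced invalid output")
    return output


def stub_llm(prompt: str) -> str:
    # Stand-in for a model call: wraps the text in the instruction name.
    instruction, _, text = prompt.partition(": ")
    return f"{instruction}({text})"


result = chain(
    stub_llm,
    ["extract: {input}", "summarize: {input}"],
    validate=lambda text: len(text) > 0,
    user_input="raw ticket",
)
```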

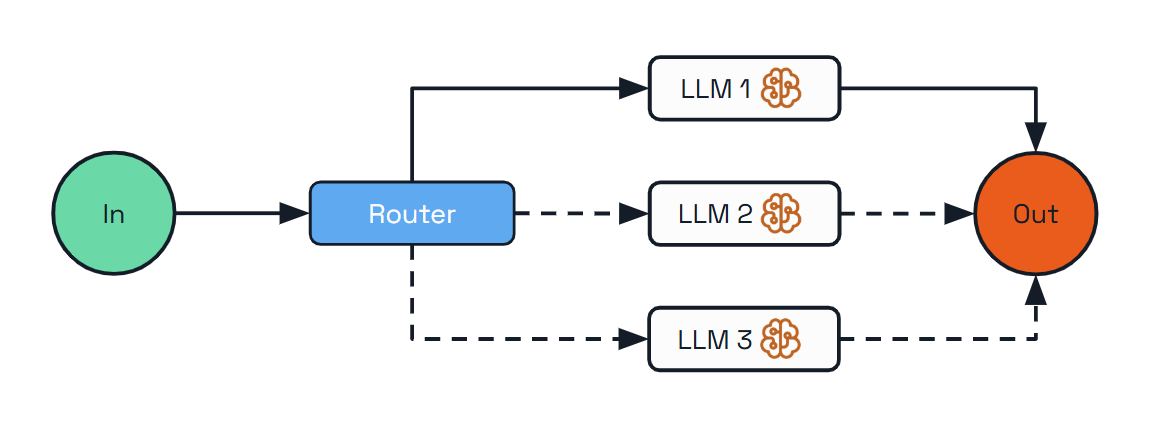

Routing

Classify inputs and direct them to specialized handlers for different query types. Enables resource optimization and specialized handling, such as dedicated experts for customer support and content creation.
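The routing pattern can be sketched as a classifier followed by a handler lookup. In this hypothetical example a keyword matcher stands in for the LLM classifier, and the labels and handler functions are invented for illustration.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def classify(query: str) -> str:
    """Stand-in for an LLM classifier: simple keyword matching."""
    q = query.lower()
    if any(word in q for word in ("refund", "invoice", "charge")):
        return "billing"
    if any(word in q for word in ("error", "crash", "bug")):
        return "technical"
    return "general"


# Hypothetical specialized handlers, one per label.
HANDLERS: Dict[str, Handler] = {
    "billing": lambda q: f"billing team: {q}",
    "technical": lambda q: f"technical team: {q}",
    "general": lambda q: f"general help: {q}",
}


def route(query: str) -> str:
    """Classify the query, then dispatch to the matching handler."""
    label = classify(query)
    handler = HANDLERS.get(label, HANDLERS["general"])
    return handler(query)
```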

Parallelization

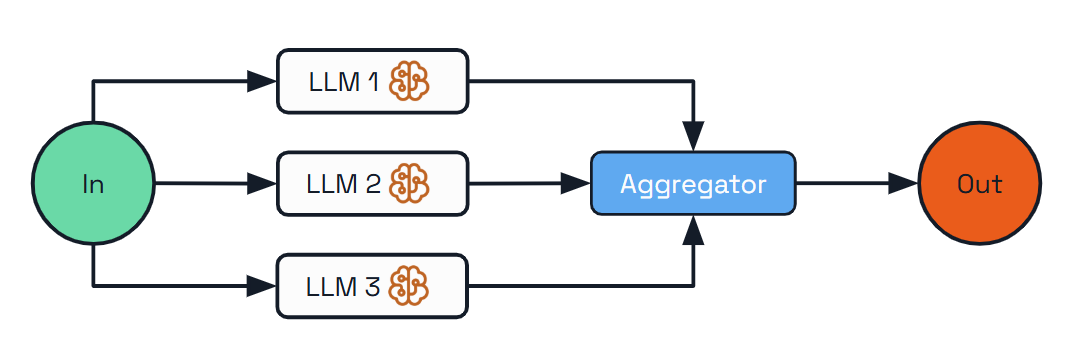

Process multiple dimensions of a problem simultaneously with outputs aggregated programmatically. Improves efficiency for complex tasks with independent subtasks that can be processed concurrently.
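A minimal sketch of parallelization using asyncio from the standard library. The `analyze` coroutine is a hypothetical stand-in for an LLM call; independent analyses run concurrently and are then aggregated programmatically into one dictionary.

```python
import asyncio
from typing import Dict, List


async def analyze(dimension: str, text: str) -> str:
    """Stand-in for one LLM call that examines a single dimension."""
    await asyncio.sleep(0.01)  # simulates model latency
    return f"{dimension}: {len(text.split())} words reviewed"


async def analyze_in_parallel(text: str, dimensions: List[str]) -> Dict[str, str]:
    """Run all analyses concurrently and aggregate the results by dimension."""
    # gather returns results in the same order as the awaitables passed in.
    results = await asyncio.gather(*(analyze(d, text) for d in dimensions))
    return dict(zip(dimensions, results))


report = asyncio.run(
    analyze_in_parallel("the product arrived late", ["sentiment", "urgency", "topic"])
)
```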

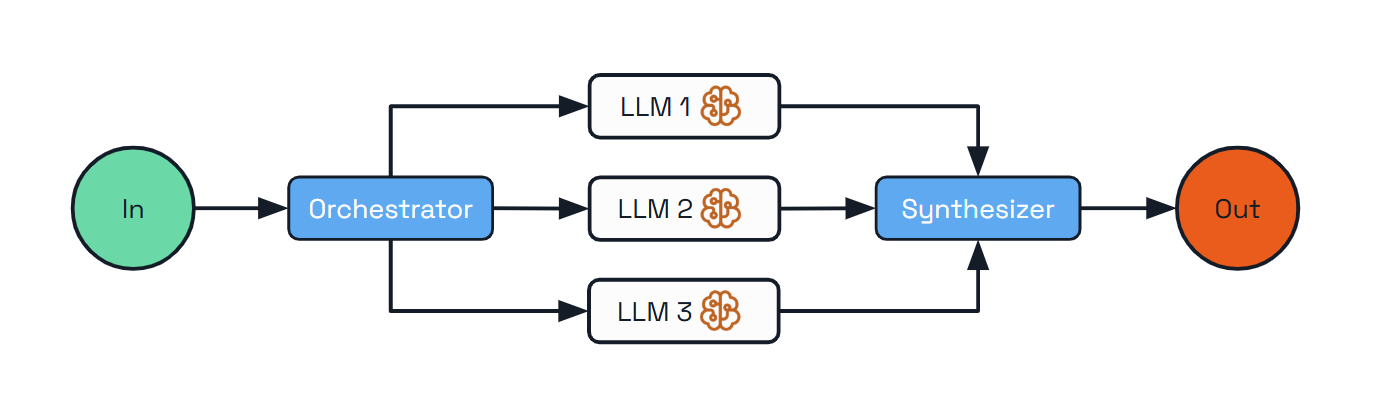

Orchestrator-Workers

A central orchestrator LLM dynamically breaks down tasks, delegates them to worker LLMs, and synthesizes their results. Ideal for highly complex tasks where the number of subtasks is not known in advance.
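The orchestrator-workers pattern can be sketched as plan, delegate, and synthesize. Here `plan` is a hypothetical stand-in for the orchestrator LLM (it simply splits on commas) and `worker` stands in for a worker LLM; what matters is that the number of subtasks is decided at run time.

```python
from typing import Dict, List, Union


def plan(task: str) -> List[str]:
    """Stand-in for the orchestrator LLM: one subtask per comma-separated item."""
    return [part.strip() for part in task.split(",") if part.strip()]


def worker(subtask: str) -> str:
    """Stand-in for a worker LLM handling a single subtask."""
    return f"done: {subtask}"


def orchestrate(task: str) -> Dict[str, Union[int, str]]:
    """Break the task down, delegate each piece, and synthesize the results."""
    subtasks = plan(task)                    # count is not known in advance
    results = [worker(s) for s in subtasks]  # delegation
    return {"subtasks": len(subtasks), "summary": "; ".join(results)}
```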

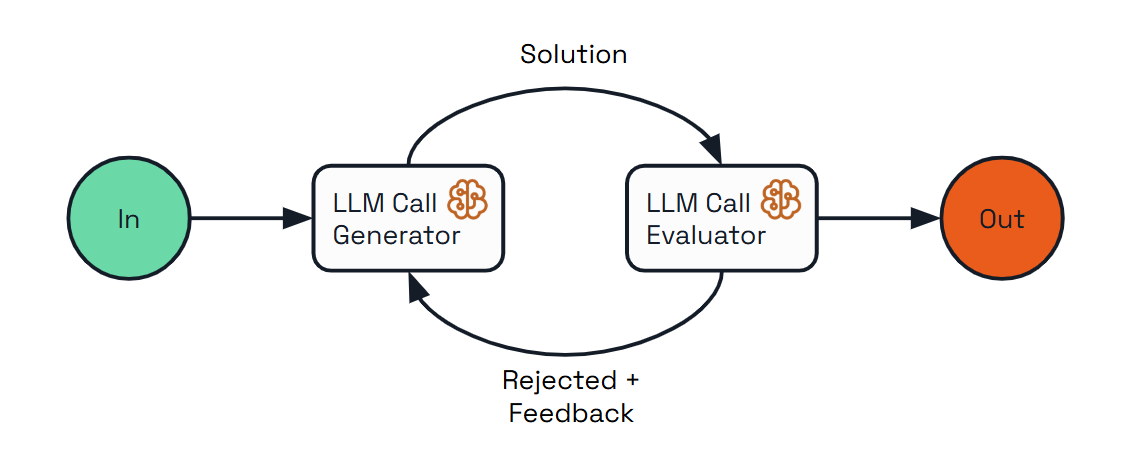

Evaluator-Optimizer

A dual-LLM process where one model generates responses while another provides evaluation and feedback in an iterative loop. Achieves quality through iteration and gradual refinement.
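A minimal sketch of the evaluator-optimizer loop, with stub functions standing in for the two models. The `refine` helper, the score target, and the round limit are hypothetical choices made for illustration.

```python
from typing import Callable, Tuple

Generator = Callable[[str], str]                # feedback -> draft
Evaluator = Callable[[str], Tuple[float, str]]  # draft -> (score, feedback)


def refine(generate: Generator, evaluate: Evaluator,
           target: float = 0.8, max_rounds: int = 3) -> Tuple[str, float, int]:
    """Alternate generation and evaluation until `target` is met or rounds run out."""
    feedback = ""
    best_draft, best_score = "", float("-inf")
    for round_no in range(1, max_rounds + 1):
        draft = generate(feedback)
        score, feedback = evaluate(draft)
        if score > best_score:
            best_draft, best_score = draft, score
        if score >= target:
            return draft, score, round_no
    # Target never reached: return the best draft seen.
    return best_draft, best_score, max_rounds


def stub_generate(feedback: str) -> str:
    # Stand-in generator: applies the one piece of feedback it understands.
    draft = "Your issue is resolved."
    if "greeting" in feedback:
        draft = "Hello! " + draft
    return draft


def stub_evaluate(draft: str) -> Tuple[float, str]:
    # Stand-in evaluator: rewards drafts that open with a greeting.
    if draft.startswith("Hello"):
        return 1.0, ""
    return 0.5, "add a greeting"
```

The round limit bounds cost when the evaluator is never satisfied, in which case the best-scoring draft is returned.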

Identity and Security

Security is central to agents in Catalyst. Every application in Catalyst, such as a durable agent or a workflow with multiple agents, automatically receives a secure, verifiable identity based on SPIFFE's application identity model. Each application with this verifiable identity has an X.509 certificate that the platform issues automatically and rotates regularly. Critically, these agent identities establish trust between agents and other applications, secure all communication, and eliminate the need to distribute long-lived credentials across code or infrastructure.

The identity provides a security boundary that restricts an agent's access to resources and authenticates it to other agents and infrastructure. This means that Catalyst agents and multi-agent workflows operate inside an identity-based security boundary by default.

Key capabilities:

- Secure app-to-app (or agent-to-agent) communication – Short-lived mTLS certificates ensure encrypted traffic and automatic mutual authentication between applications, with continuous rotation reducing exposure from compromised keys.

- Authorization & access control – Identity-based policies define which agents may call one another, what operations each can perform, and what infrastructure each can access, preventing unauthorized access.

- Cross-cloud identity federation – Integrates with AWS, Azure and GCP identity providers so agents can authenticate to cloud services without storing or distributing long-lived secrets.

- Centralized credentials – When required, API keys (such as LLM credentials) are stored and managed at the platform layer rather than in application code, preventing credential sprawl and enabling secure, unified access to multiple LLM providers.

- Auditing & traceability – All operations are tied to workload identity, enabling clear end-to-end audit trails for debugging, compliance, and forensic analysis.

- Personally identifiable information (PII) obfuscation – Detects and removes sensitive user data in LLM inputs and outputs, protecting privacy.
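To give a feel for the PII obfuscation capability in the last bullet, here is a deliberately simple regex-based sketch. It is not how Catalyst detects PII: the patterns, placeholder tokens, and `scrub` function are hypothetical and cover only email addresses and phone-like numbers.

```python
import re

# Illustrative patterns only; production PII detection needs far broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def scrub(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholder tokens."""
    text = EMAIL.sub("<EMAIL>", text)
    return PHONE.sub("<PHONE>", text)
```

Applying the same scrubbing to both the prompt sent to the model and the response returned from it matches the bullet's description of protecting inputs and outputs.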

Learn how to configure Catalyst with AWS in the Components Authentication Guide.

Getting Started

Get started by running your first durable agent with Catalyst. Then explore more Dapr Agents quickstarts, or take a deep dive into the Dapr Agents documentation.